XBOSoft has been in the software testing business since 2006. As one of the first companies dedicated solely to software testing (we don’t do anything else), we are often asked, “How much effort should be spent on testing?” A search of the Internet suggests that no one really knows. Here are a few of the answers we found:

- “A naive answer is that writing tests carries a 10% tax. But we pay taxes in order to get something in return.” The question is how much tax you are willing to pay, and what rate of return you expect.

- In October 2006, Gartner stated that “testing typically consumes between 10% and 35% of work on a system integration project.” We looked at the original Gartner report but could not find enough context about the projects behind it, so it is difficult to ascertain the assumptions behind this figure.

- “Judge by yesterday’s weather. How long did it take last time? Are you trending longer or shorter? Each shop is different.” Although this comment seems whimsical, its advice to analyze trends and conduct empirical studies for your own ‘shop’ is sound.

- “There is no way to tell. You could call it 50% or 175% or more, and not be wrong. Why not make a rough guess and multiply by Pi? It won’t be much worse than any other answer you can make up.” Multiplying by Pi may not be good advice, but the takeaway from this comment is that you cannot be wrong: the answer really depends on the level of quality you are trying to attain.

Given all this, many software testing companies may answer with a 1:1 ratio of testers to developers. The bottom line, however, is that it is very difficult to estimate how much QA and testing should accompany a software development effort. Much of it depends on your company’s software development process, the skills of your development staff, and the complexity of the logic in the software you are developing. A simple website that presents information obviously needs much less testing than an air traffic control system, not only because of the complexity of the algorithms (and therefore the coding logic), but also because of the risk involved should an error occur. Another factor is the number of platforms you tell your clients you support, and with mobile platforms that number keeps growing. Contrary to what many think, automation is not always the answer; it has its own issues, including the skills needed to build the automation, its maintainability, and the resources needed to keep it up to date.

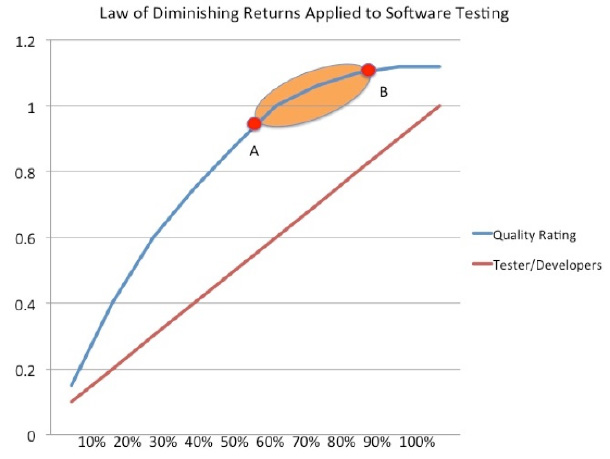

Not only does the testing you need depend on your own internal workings and skills, it also depends heavily on your tolerance for risk and on what ‘good enough’ means to you. Your tolerance for risk and the importance you place on the quality of your software are inversely related: if you have a high tolerance for risk, you place less importance on your software’s quality. By applying a simple economic principle, the law of diminishing returns, you can take a few data points, plot ‘measured’ software quality against different levels of test effort, and determine how many software testers you need over time.

As you can see from the graph, the law of diminishing returns kicks in somewhere around Point A. At that point, say at a ratio of 60% testers to developers (for example, 6 testers for 10 developers), you start to get a diminishing return on each tester you add. Beyond Point B, you can continue to add testing resources, but your return, or increase in quality, becomes smaller and smaller. Most organizations want to be somewhere in the Orange Zone, and all of them want to know where that zone is. The only way to find out is to take measurements while changing the number of testing resources, and arrive at a number that is right for your organization and its risk tolerance. As a software testing company, we do not have the right answer either, but we have worked with many organizations, both as an outsourced software testing department and as a project-based software testing partner. We usually answer the question with a range, as shown in the graph, and then work with our clients to find the right number for them based on their tolerance for risk, their definition of ‘good enough’, the resources (and skills) available, their development process, and their development team’s historical data.
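The measure-and-adjust approach described above can be sketched in a few lines of Python. This is a minimal illustration, not XBOSoft's method: it assumes you already have a numeric quality score for each staffing level you have tried, and the function names, the 50% threshold used to call a return "diminishing", and the sample numbers are all hypothetical. The idea is to compute the quality gained per added tester between measurements and flag the first staffing level after which that gain falls well below the gain from the first step.

```python
from typing import Optional, Sequence


def marginal_gains(testers: Sequence[int], quality: Sequence[float]) -> list[float]:
    """Quality gained per added tester between consecutive measurements."""
    if len(testers) != len(quality) or len(testers) < 2:
        raise ValueError("need at least two paired measurements")
    gains = []
    for i in range(1, len(testers)):
        added = testers[i] - testers[i - 1]
        if added <= 0:
            raise ValueError("tester counts must be strictly increasing")
        gains.append((quality[i] - quality[i - 1]) / added)
    return gains


def diminishing_returns_point(
    testers: Sequence[int], quality: Sequence[float], threshold: float = 0.5
) -> Optional[int]:
    """Return the tester count beyond which each extra tester buys less than
    `threshold` times the per-tester gain of the first step (a rough 'Point A'),
    or None if returns never diminish that far within the measured range.

    The threshold value is a hypothetical choice, not a standard."""
    gains = marginal_gains(testers, quality)
    baseline = gains[0]  # assumes quality initially rises with test effort
    for i in range(1, len(gains)):
        # gains[i] is the per-tester gain from testers[i] to testers[i + 1]
        if gains[i] < threshold * baseline:
            return testers[i]
    return None


if __name__ == "__main__":
    # Hypothetical measurements for a team of 10 developers (illustrative only).
    testers = [2, 4, 6, 8, 10]
    quality = [50, 68, 80, 84, 86]  # hypothetical quality score, 0-100
    print(marginal_gains(testers, quality))             # [9.0, 6.0, 2.0, 1.0]
    print(diminishing_returns_point(testers, quality))  # 6
```

With these made-up numbers, the curve flattens at about 6 testers for 10 developers, matching the 60% example ratio. A lower `threshold` keeps adding testers until the gains become very small, which fits an organization with little tolerance for risk; a higher one stops earlier and fits an organization willing to accept more risk.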

About the Author

Philip Lew

As CEO, Mr. Lew guides XBOSoft (http://www.xbosoft.com/) in its overall strategy and operations. Previously, Mr. Lew served as Chief Operating Officer at a large IT services provider.

Mr. Lew also founded Pulse Technologies, Inc., a leader in contact center systems integration based in Chantilly, VA. As the company’s President, he managed its overall growth. In 1996, Pulse Technologies and its proprietary call center data warehousing software, soEZ, were sold to EIS International, a NASDAQ-listed public company and nationwide leader in contact center management systems.

Mr. Lew is an Adjunct Professor at Alaska Pacific University and Project Management College, teaching graduate courses in software engineering, software quality assurance, IT project management, and IT governance. He is a certified PMP.

He has presented at numerous conferences on contact center technology and web application usability, user experience, and quality evaluation. He has been published in Project Management Technology, Network World, Telecommunications Magazine, Call Center Magazine, TeleProfessional, and DataPro Research Reports. He received his B.S. and Master of Engineering degrees in Operations Research from Cornell University and his Ph.D. from Beihang University where his research area focused on software usability and quality evaluation. His research is currently focused on measuring user experience.