In a previous article, I provided details on the top two performance landmines to be aware of (Size and Deployment Issues), as well as details on how best to avoid them.

With regard to Size, bigger pages inevitably take longer to load. You don’t want to spend endless test cycles optimizing your application’s “business logic” performance when the major impact on page load times comes from too much multimedia content, too many JavaScript files, or content from third-party providers. To prevent this, all it takes is to test your application from the perspective of your real end user. This allows you to keep “super-sized” web pages from hitting the public web.

Missing files, incorrect access settings, and slow web server modules are common deployment mistakes that lead to functional as well as performance problems. Whether it’s missing files that lead to HTTP 4xx errors, misconfigured web server settings that severely impact performance and throughput, or JavaScript problems that happen on browsers that weren’t tested – all are common mistakes that can easily be avoided. Below are details about the third top performance landmine.

Landmine #3: Overhead through Logging/Tracing

We all know that logging is important, as it provides valuable information when it comes to troubleshooting. Logging frameworks make it easy to generate log entries and, with the help of tools, it’s becoming easier to consume and analyze log files when it is time to troubleshoot a problem.

But what if there is too much log information that nobody looks at, that just fills up your file system and, worse, seriously impacts your application performance, thereby introducing a new problem to your application?

Excessive use of Exceptions: In the test environment this might not be a big issue if you are only simulating a fraction of the load expected on the live production system. It should, however, be a warning to whoever is responsible for moving the application to production.

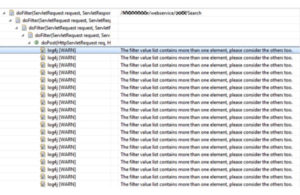

Too granular logging: Many applications have very detailed logging in order to make problem analysis easier in development and testing. When deploying an application to production, people often forget to turn off DEBUG, FINE, FINEST, or INFO logs, which then flood the file system with useless log information and cause additional runtime overhead.
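To make the runtime overhead part tangible, here is a minimal sketch using `java.util.logging`. It assumes a hypothetical logger name (`com.example.cart`) and a made-up `describeCart` helper that stands in for any expensive log message. It counts how often the message text gets built when FINE is switched off: an eagerly built message still costs you the string construction even though nothing is written, while a message passed as a supplier is never built. This is only one way to illustrate the effect, not a measurement of any real application.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.logging.Level;
import java.util.logging.Logger;

final class LazyLogMessage {

    // Counts how often the (hypothetical) expensive message was actually built.
    static final AtomicInteger messagesBuilt = new AtomicInteger();

    // Stand-in for any costly message construction, e.g. serializing an object.
    static String describeCart(int items) {
        messagesBuilt.incrementAndGet();
        return "cart with " + items + " items";
    }

    /** Logs 1000 FINE messages and returns how many message strings were built. */
    static int buildsFor(Level configuredLevel, boolean lazy) {
        Logger logger = Logger.getLogger("com.example.cart"); // hypothetical logger name
        logger.setUseParentHandlers(false); // keep the sketch quiet on the console
        logger.setLevel(configuredLevel);
        messagesBuilt.set(0);
        for (int i = 0; i < 1000; i++) {
            final int items = i;
            if (lazy) {
                // Supplier overload: the message is only built if FINE is enabled.
                logger.fine(() -> describeCart(items));
            } else {
                // Eager: the message is built first, then possibly thrown away.
                logger.fine(describeCart(items));
            }
        }
        return messagesBuilt.get();
    }

    public static void main(String[] args) {
        System.out.println("eager builds at WARNING: " + buildsFor(Level.WARNING, false));
        System.out.println("lazy builds at WARNING:  " + buildsFor(Level.WARNING, true));
    }
}
```

Turning the level off removes the file system flood in both cases; the lazy variant additionally avoids paying for messages that are never written.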

Advice for Production:

- Make sure you configure your log levels correctly when deploying to production.

- Test these settings in your test environment and verify that there are no DEBUG, FINE, … log messages still being written to the file system.

- Show the generated log files to the people that will have to use these log files in case of a production problem and get their agreement that these log entries make sense and don’t contain duplicated or “spam” messages.
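The first two points can be turned into an automated check. The following is a rough sketch, assuming `java.util.logging` and a hypothetical logger name (`com.example.checkout`); the `CapturingHandler` and the sample messages are made up for illustration. It sets the level you plan to use in production, logs one message per level, and verifies that nothing below WARNING gets through. Your logging framework and your production threshold may well differ.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.logging.Handler;
import java.util.logging.Level;
import java.util.logging.LogRecord;
import java.util.logging.Logger;

final class ProductionLogLevelCheck {

    /** Test-only handler that remembers every record it receives. */
    static final class CapturingHandler extends Handler {
        final List<LogRecord> records = new ArrayList<>();

        @Override public void publish(LogRecord record) {
            if (isLoggable(record)) {
                records.add(record);
            }
        }
        @Override public void flush() { }
        @Override public void close() { }
    }

    /** Logs one message per level and returns what got through at the configured level. */
    static List<LogRecord> emitAt(Level configuredLevel) {
        Logger logger = Logger.getLogger("com.example.checkout"); // hypothetical logger name
        logger.setUseParentHandlers(false); // keep the sketch from writing to the console
        logger.setLevel(configuredLevel);
        CapturingHandler handler = new CapturingHandler();
        logger.addHandler(handler);
        try {
            logger.finest("entering price calculation");
            logger.fine("cart contains 3 items");
            logger.info("order page rendered");
            logger.warning("payment gateway responded slowly");
        } finally {
            logger.removeHandler(handler);
        }
        return handler.records;
    }

    /** True if any record is below WARNING (FINEST, FINE, INFO, ...). */
    static boolean hasNoisyRecords(List<LogRecord> records) {
        for (LogRecord record : records) {
            if (record.getLevel().intValue() < Level.WARNING.intValue()) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        List<LogRecord> production = emitAt(Level.WARNING);
        System.out.println("records at WARNING: " + production.size()
                + ", noisy: " + hasNoisyRecords(production));
    }
}
```

A check like this can run as part of the test cycle, so a forgotten FINE or INFO setting shows up before the application reaches production.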

There is no doubt that performance is important for your business. If you don’t agree, maybe you recall these headlines:

- Target.com web site was down after promoting a new label: Article on MSN

- Twitter was down and people were complaining about it on Facebook: Huffington Post Article

- People were stranded at airports because United had a software issue: NY Times Article

Therefore the question is not whether performance is important or not, but how to ensure and verify that your application performance is good enough. It’s important to go through proper test cycles so your project does not end up as another headline in the news. When you ask people why they are not performing load tests, you usually hear things like:

- We don’t know how to test realistic user load because we know neither the use cases nor the expected load in production

- We don’t have the tools, expertise, or hardware resources to run large-scale load tests

- It is too much effort to create and especially maintain testing scripts

- Commercial tools are expensive and sit too long on the shelf between test cycles

- We don’t get actionable results for our developers

If you are the business owner or a member of a performance team, you should not accept answers like these.

Answer to: What Are Realistic User Load and Use Cases?

It’s not easy to know what realistic user load and use cases are if you are about to launch a new website or service. However, you need to make sure you do enough research on how your new service will be used once launched. Factor in how much money you spend on promotions and what conversion rate you expect. This will allow you to estimate peak loads.
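As a back-of-the-envelope sketch of such an estimate, the following turns a promotion budget into an expected peak load. Every input here is an assumption you would replace with your own research: the cost per visitor, the share of visitors arriving in the busiest hour, the pages viewed per visit, and the conversion rate are all hypothetical, as is the simple model itself.

```java
final class PeakLoadEstimate {

    /** Visitors a promotion is expected to bring in, given an assumed cost per visitor. */
    static double expectedVisitors(double promotionBudget, double costPerVisitor) {
        return promotionBudget / costPerVisitor;
    }

    /** Page views per second during the busiest hour of the promotion. */
    static double peakPageViewsPerSecond(double visitors,
                                         double shareInPeakHour,
                                         double pagesPerVisit) {
        double pageViewsInPeakHour = visitors * shareInPeakHour * pagesPerVisit;
        return pageViewsInPeakHour / 3600.0; // seconds per hour
    }

    /** Completed transactions (e.g. orders) to expect from those visitors. */
    static double expectedConversions(double visitors, double conversionRate) {
        return visitors * conversionRate;
    }

    public static void main(String[] args) {
        // All numbers below are hypothetical inputs, not benchmarks.
        double visitors = expectedVisitors(50_000.0, 0.50); // budget, cost per visitor
        double peak = peakPageViewsPerSecond(visitors, 0.20, 6.0);
        double orders = expectedConversions(visitors, 0.02);

        System.out.printf("visitors: %.0f%n", visitors);
        System.out.printf("peak page views per second: %.1f%n", peak);
        System.out.printf("expected orders: %.0f%n", orders);
    }
}
```

The result is only as good as the inputs, but even a rough number gives your load test a target that is better than a guess.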

Learn from Real Users: If you use Google Analytics, Omniture, or dynaTrace UEM to monitor your end users, you have a good understanding of current transaction volume. Factor in the new features and how many new users you want to attract. Also factor in any promotions you are about to run. Analyze your web server logs, as they can give you even more valuable information regarding request volume. Combining all this data allows you to answer the following questions:

- What are the main landing pages I need to test? What’s the peak load, and what is the current and expected Page Load Time?

- What are the typical click paths through the application? Do we have common click scenarios that we can model into a user type?

- Where are my users located on the world map, and what browsers do they use? What are the main browser/location combinations we need to test?
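For the web server log part, a small script is often enough to get first answers to the questions above. The sketch below assumes your access log is in Common Log Format; the class name, the sample lines, and the paths in them are invented for illustration. It counts requests per minute to find the peak and counts requests per path to find the most requested pages. A real log will likely need a more tolerant parser.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class AccessLogPeak {

    // Common Log Format: host ident user [timestamp] "METHOD path protocol" status bytes
    private static final Pattern LINE = Pattern.compile(
            "^\\S+ \\S+ \\S+ \\[([^\\]]+)\\] \"\\S+ (\\S+) [^\"]*\" \\d{3} \\S+");

    /** Requests per minute, keyed by the timestamp truncated to dd/MMM/yyyy:HH:mm. */
    static Map<String, Integer> requestsPerMinute(List<String> lines) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            Matcher m = LINE.matcher(line);
            if (m.find() && m.group(1).length() >= 17) {
                counts.merge(m.group(1).substring(0, 17), 1, Integer::sum);
            }
        }
        return counts;
    }

    /** Requests per requested path, to spot the main landing pages. */
    static Map<String, Integer> requestsPerPath(List<String> lines) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            Matcher m = LINE.matcher(line);
            if (m.find()) {
                counts.merge(m.group(2), 1, Integer::sum);
            }
        }
        return counts;
    }

    /** Highest count in the map, or 0 if the map is empty. */
    static int peak(Map<String, Integer> counts) {
        int max = 0;
        for (int count : counts.values()) {
            max = Math.max(max, count);
        }
        return max;
    }

    public static void main(String[] args) {
        // Hypothetical sample lines; in practice read them from your access log file.
        List<String> lines = Arrays.asList(
                "192.0.2.1 - - [10/Oct/2011:13:55:36 -0700] \"GET /home HTTP/1.1\" 200 2326",
                "192.0.2.7 - - [10/Oct/2011:13:55:58 -0700] \"GET /home HTTP/1.1\" 200 2326",
                "192.0.2.9 - - [10/Oct/2011:13:56:02 -0700] \"GET /checkout HTTP/1.1\" 200 512");
        System.out.println("peak requests per minute: " + peak(requestsPerMinute(lines)));
        System.out.println("requests per path: " + requestsPerPath(lines));
    }
}
```

Click paths, user locations, and browser mix need more than this (session and user agent data, for example), which is where your end-user monitoring tool comes in.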

For more insights into how you can push load testing in your development organization to ensure that there won’t be any business impact on new releases, click here.

About the Author

Andreas Grabner, Technology Strategist, Compuware dynaTrace

Andreas Grabner has 15 years of experience as an architect and developer in the Java and .NET space. In his current role, Andi works as a Technology Strategist for Compuware/dynaTrace and leads the Center of Excellence team. He influences the product strategy and works closely with customers in implementing performance management solutions across the entire application lifecycle. He is a frequent speaker at technology conferences on performance- and architecture-related topics and regularly publishes articles. Andi blogs on blog.dynatrace.com.