Recorded 01/23/19

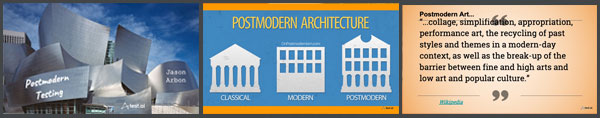

Modern testing isn’t keeping up with Agile and DevOps. Postmodern testing, like its artistic parallel, accepts this reality and practically combines the lessons of what works and what doesn’t in today’s software engineering landscape. This webinar shares the basic dos and don’ts of postmodern testing that save time and money and make testers awesome members of the engineering team. Postmodern testing is informed by Jason’s experiences at Google, Microsoft, uTest, and test.ai. Don’t learn the hard way: join us for an introduction to postmodern testing, avoid the mistakes, and learn from what worked when testing software for billions of users and thousands of applications.

Webinar Presentation:

Q&A

During the Q&A period we ran out of time and could not get to all of the questions. We sent these questions to Jason, and he categorized them and responded quite thoroughly! Thanks to Jason!

01/23/19 STP Intro To Postmodern Testing Webinar Questions & Answers:

TESTING FRAMEWORKS

[Q] What is the difference between “testing” and “verification”?

[A] Jason: Simply, “testing” is trying to break or push the limits of a product. “Verification” is making sure that a product performs as expected under normal conditions.

[Q] What does a ratio of testing to verification mean?

[A] Jason: The Test vs. Verification ratio is a simple framework for allocating resources between testing and verifying. If the ratio is, say, 7/10 (0.70), that means that if you had 10 testers, 7 of them should be testing and 3 of them should be verifying the product. These are very different activities and mindsets. If you have only one person, 70% of their time should be spent testing and 30% verifying the product. Again, never go below 20% or above 80% in either direction. Too often teams spend all their time verifying these days and forget to test. 😉
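The headcount arithmetic behind the ratio fits in a few lines. This is an illustrative Python sketch; the helper name `allocate` is hypothetical, and it assumes the ratio is the fraction of people doing testing, clamped to the 20%-80% band described above:

```python
from __future__ import annotations


def allocate(team_size: int, test_ratio: float) -> tuple[int, int]:
    """Split a team into (testing, verifying) headcounts.

    test_ratio is the share of effort spent testing, e.g. 0.70 for 7/10.
    It is clamped to the 20%-80% band so neither activity is starved.
    """
    clamped = min(max(test_ratio, 0.20), 0.80)
    testing = round(team_size * clamped)
    return testing, team_size - testing


print(allocate(10, 0.70))  # 7 testing, 3 verifying
print(allocate(10, 0.95))  # clamped to 0.80: 8 testing, 2 verifying
```

For very small teams the rounding matters, so for a single person it is simpler to apply the clamped ratio to their hours instead of to headcount.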

[Q] Can you please elaborate on why BDD/Cucumber approach to testing is wrong?

[A] Jason: Most smart test teams are eventually drawn to the siren of BDD/Cucumber-based test definitions. This is like record and playback. It sounds so good because you can imagine some non-technical testers writing tons of tests, with just a few programming testers writing code that translates these high-level test definitions into executable code. It is the siren song of test automation. Teams are short on programming testers, and this sounds great… as great as a perpetual motion machine. However, the truth is that almost every implementation of this idea is a money and time trap, often delivering zero or even negative value. There are several reasons the BDD/Cucumber approach to testing isn’t a great idea:

- Forget my opinion :). The actual creator of Cucumber, Aslak Hellesøy, says this isn’t a great idea: https://www.infoq.com/news/2018/04/cucumber-bdd-ten-years. He was still confident in his opinion as recently as a couple of months ago. He says:

- “Today, Cucumber has become part of the standard toolset of many organizations. Some of them use it for BDD, but most of them use it just for “BDD testing”, whatever that means. It’s right there in the name — Behaviour-Driven *Development*.”

- “Our book also brought Cucumber to the tester communities, who saw it as a way to automate tests. Ironically, this also led to the misconception that BDD and Cucumber are for testing, and testing only. When Dan North came up with BDD, his goal was to make TDD more accessible, making it easier for developers to understand that TDD is a technique for designing and developing software, not testing it.”

- “Let’s face it — most people who use Cucumber don’t use it for BDD. They use it for testing, writing their tests afterward. If that’s your workflow, Cucumber isn’t significantly better than many other testing tools. If no customers, domain experts or product owners are going to read the Gherkin documents, I would usually recommend using a typical xUnit tool instead (JUnit, RSpec, NUnit, Mocha etc).”

- Compared to a developer or tester who is just writing tests, BDD/Cucumber adds a burden to several roles on the team: the test writer, the test translation (automation model) layer developer, and the feature developer.

- The test writer is blocked from writing any tests that depend on an action or validation step that isn’t yet built into the automation model layer.

- The automation model developer is often blocked by testability issues and by waiting on access from the feature developer.

- The feature developer often has to write testability interfaces for the test automation model developer (additional work that has nothing to do with the product)

- Dependencies: this set of dependencies means the test development work can become more complex than the original product feature roadmap/Gantt chart 🙂

- Because modern software is often redesigned or rewritten from scratch periodically, the design, project management, and implementation overhead mean these projects yield little value for the investment. Redesigns often happen before the team gets value from all the work on the previous design, and then the BDD/Cucumber process has to start over with each redesign. And starting over assumes the testers are still there, which is often not the case!!
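To make the extra layer concrete, here is a toy Python sketch of a step registry. It is hypothetical and deliberately simplified (it is not Cucumber’s API): every Gherkin-style sentence must be bound to code before a scenario can run, so a test writer who needs an unbound step is blocked. The plain xUnit-style test at the end checks the same behavior with no translation layer at all.

```python
import re
import unittest


class Cart:
    """Stand-in for the product code under test."""

    def __init__(self, items: int = 0) -> None:
        self.items = items

    def add(self, n: int) -> None:
        self.items += n


class UndefinedStep(Exception):
    """Raised when a scenario uses a sentence nobody has bound to code yet."""


STEPS = {}  # compiled pattern -> binding function (the "automation model layer")


def step(pattern):
    def register(func):
        STEPS[re.compile(pattern)] = func
        return func
    return register


@step(r"the cart has (\d+) items")
def given_cart(context, n):
    context["cart"] = Cart(int(n))


@step(r"I add (\d+) items")
def add_items(context, n):
    context["cart"].add(int(n))


def run_scenario(lines, context):
    for line in lines:
        for pattern, func in STEPS.items():
            match = pattern.fullmatch(line)
            if match:
                func(context, *match.groups())
                break
        else:
            # No binding exists: the test writer must wait for the layer developer.
            raise UndefinedStep(line)


class CartTest(unittest.TestCase):
    """The same check written directly against the product, with no extra layer."""

    def test_add_items(self):
        cart = Cart(2)
        cart.add(3)
        self.assertEqual(cart.items, 5)
```

Running the scenario `["the cart has 2 items", "I add 3 items", "the cart should have 5 items"]` raises `UndefinedStep`, because nobody has bound the final sentence yet; the xUnit test needs no such coordination.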

[Q] Are there good links for inside-out testing?

[A] Jason: There is a mention of this approach in the “How Google Tests Software” book; it was an approach used by the Gmail team at the time. Also, the cypress.io framework is a hybrid of outside-in and inside-out approaches. Longer term, I hope to work on an AI-first, inside-out framework, but that is a while off. Really, there isn’t much complexity in building such a testing approach: just parameterize the production code to include the test code. 🙂 Note: there are security aspects to using this in production, so I’d suggest you think them through carefully… at a minimum, only allow test code to be included when using an authorized account.
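A minimal Python sketch of the idea, assuming a hypothetical app that receives launch parameters (for example, from a URL query string) and knows the signed-in account. The `run_tests` parameter, the allow-list, and all other names are illustrative assumptions, not a real framework:

```python
# Hypothetical allow-list: only these accounts may trigger the embedded tests.
AUTHORIZED_TEST_ACCOUNTS = {"qa-bot@example.com"}


def embedded_self_tests(app):
    """Test code that ships in the product tree, next to the code it checks."""
    return {"add_to_cart": app.add_to_cart("sku-1") == 1}


class App:
    def __init__(self, account: str, params: dict) -> None:
        self.account = account
        self.params = params
        self.cart = []

    def add_to_cart(self, sku: str) -> int:
        self.cart.append(sku)
        return len(self.cart)

    def start(self):
        """Normal startup; runs the embedded tests only when explicitly asked
        to AND the signed-in account is authorized."""
        wants_tests = self.params.get("run_tests") == "1"
        if wants_tests and self.account in AUTHORIZED_TEST_ACCOUNTS:
            return embedded_self_tests(self)
        return None
```

Here `App("qa-bot@example.com", {"run_tests": "1"}).start()` returns `{"add_to_cart": True}`, while any other account, or a launch without the parameter, gets a normal startup.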

[Q] Any thoughts on how things like Chaos Engineering fit into postmodern testing?

[A] Jason: Chaos engineering is really just old-school fault injection applied to microservices and deployment. It is useful, but the faults it emulates are not always reflective or predictive of real-world outages. Also, take care that the time spent on this approach wouldn’t be better spent on other testing and quality activities. FWIW, the strongest advocate of this approach, Netflix, still has far more production downtime than, say, Gmail (http://currentlydown.com/netflix.com, https://downdetector.com/status/gmail), which focuses more on resilience by design and on strong DevOps verification scripts and instrumentation during deployment.
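Fault injection itself is easy to sketch. Below is a minimal Python illustration, assuming a synchronous service call; the wrapper names are hypothetical, and the retry wrapper is just one simple example of resilience by design, not a description of how Gmail or Netflix actually work:

```python
import random


def inject_faults(func, failure_rate, rng):
    """Wrap a call so it raises ConnectionError with probability failure_rate."""
    def wrapper(*args, **kwargs):
        if rng.random() < failure_rate:
            raise ConnectionError("injected fault")
        return func(*args, **kwargs)
    return wrapper


def with_retries(func, attempts):
    """One simple resilience-by-design pattern: retry transient failures."""
    def wrapper(*args, **kwargs):
        last_error = None
        for _ in range(attempts):
            try:
                return func(*args, **kwargs)
            except ConnectionError as err:
                last_error = err
        raise last_error
    return wrapper


def fetch_profile(user_id):
    """Stand-in for a call to a downstream microservice."""
    return {"id": user_id}


# Roughly half of the raw calls fail; the retrying caller should still succeed.
flaky_fetch = inject_faults(fetch_profile, 0.5, random.Random(42))
resilient_fetch = with_retries(flaky_fetch, attempts=10)
print(resilient_fetch(7))
```

A seeded `random.Random` keeps injected failures reproducible between runs, which makes a failing experiment easier to debug.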

[Q] What is wrong with static analysis?

[A] Jason: Simply stated, static analysis generates more false positives than it is worth. Not only are there so many false positives, but the engineering time spent analyzing the output is almost always better spent on other efforts to improve quality. There are people looking to apply AI to static analysis, but from what I’ve seen, even those efforts will take a long time to prove a net-positive use of engineering time and money. Quick example… at Google, we stopped doing static analysis of the Chrome/ChromeOS codebase, and it is a relatively high-quality browser!
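The cost argument is simple arithmetic. A back-of-the-envelope Python sketch, using made-up numbers purely for illustration (they are not data from Google or from the webinar):

```python
def false_positive_triage_hours(warnings: int, false_positive_rate: float,
                                minutes_per_warning: float) -> float:
    """Engineering hours spent investigating warnings that turn out not to be bugs."""
    false_positives = warnings * false_positive_rate
    return false_positives * minutes_per_warning / 60


# Hypothetical inputs, not measurements: 1,000 warnings, 90% false positives,
# 6 minutes to triage each one.
hours = false_positive_triage_hours(1000, 0.9, 6)
print(f"{hours:.0f} hours spent on false positives")
```

With these assumed inputs that comes to 90 engineering hours before a single real bug is fixed; plug in your own tool’s numbers to see which side of the trade-off you are on.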

[Q] Can you let us know where we can use the cypress.io framework (e.g., with Protractor or Selenium)?

[A] Jason: This is the very reason I have avoided talking about frameworks openly in the past 😉 What I meant to convey is that cypress.io is much closer to ‘inside-out’ testing than most frameworks. But… it is still an outside-in framework and doesn’t deploy the test code with the product code, which is a fundamentally different architecture. I’d actually recommend that folks not ramp up on Cypress, but rather work with the development team to simply inject their test code into the application under test. If you want orchestration across test instances, simply load the product/app with different test parameters. If you want pass/fail reporting, simply report in the app’s own UI, or send the results to a data store for analysis. Simple. The time spent ramping up on and deploying cypress.io is probably better spent working with the development team to deploy an inside-out approach. Cypress is almost ‘worse’, as it looks almost like inside-out testing and might distract folks from the pure and simple inside-out approach. But most testers fear production code and collaborating with developers… so many people will flock to cypress.io 🙂
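A minimal Python sketch of that orchestration, assuming a hypothetical product class that can be started with a `test` parameter. An in-memory SQLite database from Python’s standard library stands in for whatever data store you actually use, and the “instances” run one after another here, where a real setup would launch separate processes or devices:

```python
import sqlite3


class App:
    """Hypothetical product build that carries its own tests, selected by a launch parameter."""

    def __init__(self, params: dict) -> None:
        self.params = params

    def checkout_total(self, prices):
        return sum(prices)

    def run_embedded_test(self):
        name = self.params["test"]
        if name == "checkout_total":
            passed = self.checkout_total([2, 3]) == 5
        elif name == "empty_checkout":
            passed = self.checkout_total([]) == 0
        else:
            raise ValueError(f"unknown test {name!r}")
        return name, passed


def orchestrate(test_names, db):
    """Launch one app instance per test parameter and record pass/fail in a data store."""
    db.execute("CREATE TABLE IF NOT EXISTS results (test TEXT, passed INTEGER)")
    for name in test_names:
        test, passed = App({"test": name}).run_embedded_test()
        db.execute("INSERT INTO results VALUES (?, ?)", (test, int(passed)))
    db.commit()
    return db.execute("SELECT test, passed FROM results ORDER BY test").fetchall()


db = sqlite3.connect(":memory:")
print(orchestrate(["checkout_total", "empty_checkout"], db))
```

There is no separate test harness: the only “framework” is the product’s own launch parameters plus a table of results.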

[Q] Hi Jason, may I know what approach or test tools best-fit business intelligence testing? Specifically IBM Cognos, thanks.

[A] Jason: Generally, there are dedicated tools out there for testing Cognos and I’d recommend just using those for such a vertically integrated, archaic product. If you want to go deep, I’d suggest simply testing the data and not the UI of the project by using unsupervised/k-means-like approaches to identify anomalies. Or, use LSTMs to predict/test for sequences of data that appear in the product.

At this time, I’d think AI/ML for testing a specific deployment of Cognos would be a net negative ROI and would become a project on its own.

AI IN TESTING

[Q] How is AI or ML going to change the way we test today?

[A] Jason: Um, there’s not a quick answer. I would suggest analogies: How did the steam engine affect farmers? How did the loom impact sewing? How did the telescope impact astronomy? How did the transistor impact computing? The tech changes of the past 20 years (PC, mobile, cloud, Moore’s law, VR/AR, etc.) have only made testing harder. Fundamentally, AI is a way to create software from examples of human judgments. That is what testing is at its core. AI is the first major tech shift that seems almost custom-built to dramatically change testing.

[Q] Is AI going to replace automation testing in near future or it will complement the way we work today?

[A] Jason: Both. It will replace most of the mundane, basic testing, given the reuse, scale, and adaptability of AI-based testing approaches. AI is fundamentally an exponential technology, so the impact on testing will be nothing… then more nothing… then something… and then wow! Ironically, the subjective measure of quality is the most easily replicated by AI. Regression and feature testing are also ripe targets. AI will ultimately do what people originally expected automation to do. To date, automation has been ‘linear’ because we applied poor engineers to hard-coded projects, which is why it never met expectations of impact. AI will replace many human judgments of quality, but it will be very difficult for AI to summarize the intersection of functional quality, competitive quality, and business requirements. It will also be difficult for AI to assess innovative experiences and to communicate quality issues to the team, management, and users. At a gross level, I think individual testers and test directors will disappear, but the test leads of today will survive, and their data-interpretation role (not human management) will live on. That is the test role that becomes even more valuable, at least until machines become conscious; then all bets are off. =)

[Q] Is AI going to replace non-functional testing?

[A] Jason: Based on my experiments, the most qualitative aspects of quality (e.g., usability, trustworthiness) are more easily handled by AI than by classic approaches. (Search YouTube for that content; it’s too much for an FAQ :) As for performance, load, etc., AI can drive the application ‘better, faster, cheaper’ than classical approaches, and can even analyze the results better than humans by applying unsupervised learning to the logs of those test runs. And because AI approaches can be reused, benchmarking quality is now plausible. This is a hugely open-ended question for an FAQ, but I hope this is a good start 🙂

[Q] Maybe I am wrong, but it looks like testing as a separate function is going away and developers will do the testing themselves with AI-driven tools?

[A] Jason: The easiest way to see what will unfold in the next few years IMHO is to think that most ‘individual contributor’ testing work will move to either AI or developers. Most manual testing will move to AI-based approaches. Most custom test automation will move to folks who can write production code (whatever you call that role), but there will be an ‘elevation’ in the status and pay of testers who can synthesise an approach and analysis of all this testing activity and data to deliver a ‘ship/no-ship’ recommendation. There will be fewer upper management test-only roles, fewer manual testing roles, fewer test script development roles, but more and better paid ‘test lead’-like roles in the future.

[Q] Perhaps I missed the main point, but if AI will soon do most software testing, why do you say ‘don’t leave the profession’?

[A] Jason: The hardest problem with data-driven, AI-driven testing is knowing which problems to apply the techniques to and, most importantly, evaluating the value and correctness of those approaches. This requires experienced testers. We need the best and most motivated testers to stay in the profession, or we risk another lost decade of data/ML for data/ML’s sake while still not being able to move the needle on quality. Frankly, the focus on automation over the last 15 years made this mistake. The best manual testers left because they were intimidated by programming, and the best engineers left to become developers because of higher pay and prestige. I hope the same doesn’t happen in this AI revolution. While AI is going to do most of the mundane and repetitive parts of software testing, we still need our best testers to lead us in defining which hard quality problems we need to solve.

[Q] At some point, in this presentation, many, or most conventional approaches to automation have been disparaged… there only seem to be a few approaches that you recommend, most notably AI. For all the AI approaches I have seen presented in conferences and webinars, I have not seen an approach that could replace conventional automation. Can you elaborate on how teams are using AI, how can this be applied by those of us outside companies like Google?

[A] Jason: Yes, that is correct. If there are automation approaches I didn’t mention, I would probably ‘disparage’ or ‘discourage’ them as well. I’ve worked on most of these approaches in the past and seen the mid- to long-term consequences of pursuing them. The key problem with most automation approaches is simply that they create a new ‘mini-project’ within the organization, run by junior developers, often with fill-in project management, and separate from the larger team and product. They always sound great to testers because they get to create a new little project of their own and get to ‘play’ developer or ‘PM’ with little oversight. I’ll admit I’m guilty of this myself, and it can be very fun. But this creates a silo of poorly designed, implemented, and managed work, cut off from the rest of the team. Often the best tests are simply code that lives in the same tree, in the same language, and is written by the same folks who write the product features. Most modern test frameworks and strategies do not do this.

That said, I agree that most AI-based test automation approaches are ‘early’ and not ready for prime time. Frankly, most test automation marketed as ‘AI’ is not actually an AI-first approach either. What I meant to say is that I see AI-based test approaches coming sooner than folks expect, and they will dramatically change the landscape of testing. Today, I would simply suggest folks forget about frameworks and just pursue an inside-out approach to testing via experienced developers. Meanwhile, to future-proof themselves, I’d suggest test folks ramp up on machine learning approaches and look for niche ways to experimentally apply them to their hardest business-specific testing/quality problems.

[Q] How does AI-driven testing relate to performance/load testing?

[A] Jason: A few ways:

- Performance testing: Performance can be measured in parallel as AI-generated tests execute… meaning more performance coverage within an app

- Load testing: Generally AI can generate ‘more’ valid and diverse traffic for load with less effort. But that isn’t really interesting…

- Server data/logs analysis via unsupervised learning: post load test, use unsupervised/K-means clustering to identify misbehaving servers without extensive heuristics
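A rough sketch of the log-analysis idea in plain Python, assuming a hypothetical log format of `server latency_ms status_code` per line. It computes per-server features, scales them to 0-1, runs a small k-means (k=2, deterministic farthest-point initialization), and flags the smaller cluster. A real deployment would likely use an ML library and richer features, and the “smallest cluster is misbehaving” rule is an assumption that only holds when most servers are healthy.

```python
import math
from collections import defaultdict


def server_features(log_lines):
    """Map each server to (mean latency in ms, fraction of 5xx responses)."""
    stats = defaultdict(lambda: [0, 0.0, 0])  # [requests, total latency, errors]
    for line in log_lines:
        server, latency, status = line.split()
        s = stats[server]
        s[0] += 1
        s[1] += float(latency)
        s[2] += int(status.startswith("5"))
    return {srv: (total / n, errors / n) for srv, (n, total, errors) in stats.items()}


def normalize(vectors):
    """Min-max scale every feature to [0, 1] so no single unit dominates."""
    cols = list(zip(*vectors))
    lo = [min(c) for c in cols]
    hi = [max(c) for c in cols]
    return [tuple((v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(vec, lo, hi))
            for vec in vectors]


def kmeans(points, k, iterations=20):
    """Plain k-means with deterministic farthest-point initialization."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[min(range(k), key=lambda i: math.dist(p, centroids[i]))].append(p)
        updated = [tuple(sum(axis) / len(c) for axis in zip(*c)) if c else centroids[i]
                   for i, c in enumerate(clusters)]
        if updated == centroids:
            break
        centroids = updated
    return centroids


def misbehaving_servers(log_lines, k=2):
    """Cluster servers by their log features and return the smallest cluster."""
    features = server_features(log_lines)
    names = sorted(features)
    points = normalize([features[n] for n in names])
    centroids = kmeans(points, k)
    labels = [min(range(k), key=lambda i: math.dist(p, centroids[i])) for p in points]
    sizes = [labels.count(i) for i in range(k)]
    minority = sizes.index(min(sizes))
    return [n for n, label in zip(names, labels) if label == minority]
```

No per-metric thresholds are hand-written here; the clustering decides which servers look unlike the rest.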

TESTING IN ORGANIZATIONS

[Q] Regarding separating yourself from Agile processes, a lot of testers do not have the autonomy to do this. Do you have any suggestions for strategies where you are forced to participate in an Agile process while still maintaining your sanity? Maybe we need a sub-genre of Postmodern testing to subvert Agile. PostPunk Testing perhaps?

[A] Jason: Subversive, not sub-genre 🙂 Testers are often left alone, unguarded, unwatched. That can be a good or a bad thing. You can go through the ‘agile motions’ and even do the right thing when folks aren’t looking… but that’s not healthy in the long term.

Short-term suggestion: Work to build the case that stepping outside the agile process would be better for QA. Every organization has both common and unique challenges, and I bet you see the inefficiencies firsthand. If you believe this, you should advocate for it.

Long-term suggestion: If you worry it will cost you your job, I’d frankly suggest that you find a different place to work where you can deliver the value you know you can deliver, and work on projects and teams that will appreciate your work. I know this may be hard to swallow, and I know that not everyone is in a position to do so right now. However, there is a serious career risk that your resume fills up with so-so projects of average quality and that your skill set doesn’t grow. Those two issues threaten your long-term career and will affect your ability to get the next job. Software testing jobs are growing at about 20% annually, but many companies still can’t find enough candidates to hire. My advice is to find and work in a healthy place.

[Q] How can I learn automated testing if I’m unable to get on-the-job training?

[A] Jason: While on-the-job training is a great way to learn, it’s not a reliable channel for developing new skills. If you are really dedicated, you will steal time during the workday and use off-hours/continuing education to learn to become an awesome programmer. Interestingly, the web and most apps are ‘open’: you can test any app in the world you want to practice on without anyone’s permission. Frankly, though, relying on learning on the job rather than on your own effort outside of work has always seemed detrimental both to the people wanting to learn and to the company encouraging this ‘partially motivated learning’.

For those who are able to… I’d suggest retraining to be a programmer. Learning to program is a serious endeavor, and almost no one masters it, even when focused full time or going through college programs. If that’s not possible full time, and if you are hungry enough, you can try it off-hours at a slightly slower rate. But don’t rely on ad hoc, slow, partial training. Test automation is often more difficult than normal programming; you can’t successfully learn it on ‘learning Fridays’ during your day job. Companies often try to convert non-programming testers slowly into programming testers, but this is often really ‘fake’ career growth to keep the testers around; it rarely yields great results for the students and rarely produces a great, positive-ROI test programmer at the end of the day. If you are motivated, you will do this outside of your day job… Sorry, just reality.

[Q] Thanks for the honesty. If there were one takeaway for someone in an organization that’s doing a lot of the ‘don’ts’, to start steering a Titanic in a slightly different direction, what would it be? I think a lot of us see many of the problems that may be looming but wonder where the best small changes might be.

[A] Jason: Simple, I think. Either ‘just do it’ (do what is right without asking for permission) or move to a better team. Testers have far more latitude to experiment than they think, as no one really wants to think about testing or knows when it is or should be done. 🙂 If you aren’t comfortable with that, the next best thing is to move to a team that would be supportive. Ask around within your company, or outside it, and find an environment that agrees with your thinking. If the team agrees with your thinking, you will grow faster and, if you are right, build a product you are prouder of and will be proud to tell your next employer about. 🙂 Don’t stay still in an unhealthy organization.

[Q] Is a multidisciplinary (Dev Build Test Deploy Release) TCoE (Testing Center of Excellence) worth having to discuss Design-for-Test opportunities, share best practices, toolchain integration ideas?

[A] Jason: I don’t think multidisciplinary TCoEs are generally useful. They sound good on paper, but one of the key issues in ‘modern’ testing is that so much energy is spent on non-testing activities, and multidisciplinary TCoEs are even worse than test-only ones in that respect. These efforts rarely result in truly more efficient testing/quality solutions. Can someone quantitatively point to one? 🙂 Too often these efforts are the result of individuals seeking to broaden their impact and visibility and to role-play as architects, product managers, or dev managers… for their own careers, not for the greater good. Most of the ones I have seen, even at Microsoft and Google, were eventually dismantled due to lack of effectiveness, and the folks on those TCoEs were left seeking a new, more mundane role in the company. A lesson I learned at Google is to speak with code. These TCoE-like groups should be ad hoc and opportunistic and should deliver value rather than new organizational complexity, IMHO. Most often the company, product quality, and customers benefit more when that time and those resources go into actual testing work in a more focused area.

OTHER

[Q] How can I get a copy of the deck?

[A] The deck is provided above or you can download it here: https://www.slideshare.net/testdotai/intro-to-postmodern-testing

[Q] Can test.ai’s platform test standalone apps (non-web apps/non-mobile)… apps with several modules?

[A] Jason: It could, but we’re currently focusing on native mobile apps only. That is a big enough problem. 🙂 Technically, it could work on anything that has a user interface that can be screenshotted and that can receive clicks/taps and text at an x/y coordinate.
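As a rough illustration, that minimum surface can be written as a Python `typing.Protocol`. This is a hypothetical interface for explanation only, not test.ai’s actual API; the fake desktop app and the hard-coded coordinates are placeholders:

```python
from typing import Protocol


class Screen(Protocol):
    """Hypothetical minimum an image-based tester needs from any UI."""

    def screenshot(self) -> bytes: ...
    def tap(self, x: int, y: int) -> None: ...
    def type_text(self, x: int, y: int, text: str) -> None: ...


class FakeDesktopApp:
    """In-memory stand-in that records the input events it receives."""

    def __init__(self) -> None:
        self.events = []

    def screenshot(self) -> bytes:
        return b"fake-image-bytes"

    def tap(self, x: int, y: int) -> None:
        self.events.append(("tap", x, y))

    def type_text(self, x: int, y: int, text: str) -> None:
        self.events.append(("type", x, y, text))


def login_smoke_test(screen: Screen) -> None:
    # A real AI tester would locate these elements from the screenshot;
    # the coordinates below are hard-coded placeholders.
    if not screen.screenshot():
        raise RuntimeError("no screenshot captured")
    screen.type_text(100, 200, "user@example.com")
    screen.tap(100, 260)
```

Anything that satisfies these three methods (a desktop app, a web page, a kiosk) could be driven the same way, which is the point of the answer above.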

[Q] How do we get in on the test.ai beta download?

[A] Jason: Test.ai is currently focusing on testing at large scale, at companies that have hundreds or more apps to worry about at a time, as that is the key advantage of the AI-based approach: scale and reuse. That said, if you test a ‘top’ app, it is likely we will have something for you in the near future, so stay tuned. If you are really adamant and willing to be an early adopter and pay/work with a third party, feel free to contact me; we have room in a queue for a small set of folks. Stay tuned, more fun stuff is coming; we just aren’t a traditional test vendor. 🙂

Our Speaker:

Jason Arbon – CEO, test.ai

Jason Arbon is the CEO at test.ai, where his mission is to test all the world’s apps. Google’s AI investment arm led test.ai’s latest funding round. Jason previously worked on several large-scale products: web search at Google and Bing, the web browsers Chrome and Internet Explorer, operating systems such as Windows and ChromeOS, and crowd-sourced testing at uTest.com. Jason has also co-authored books such as How Google Tests Software and App Quality: Secrets for Agile App.

See Jason at STPCon Spring 2019

Jason will be delivering a workshop, a keynote, and a session at the Hyatt Regency San Francisco Airport Hotel, April 1-4, 2019. Join us for four days of all things Software Testing, QA, QE, Automation, Machine Learning, and more… See the full program at www.STPCon.com

Workshop: Testing Software with Machine Learning

How do you train an AI bot to do your software testing? Integrating AI into your testing activities can be intimidating, but in reality it is pretty easy; it just takes a lot of ‘grungy’ work. Learn how to directly apply AI to real-world testing problems without having a Ph.D. in computer science.

Keynote: Testing all the World’s Apps with AI

How can one system test all the world’s apps, mobile and web? Jason shares lessons from applying AI techniques to do just that. Learn how AI can help with the problems of identifying elements in applications and sequencing test steps for regression testing.

Session: Testing AI-Based Systems

People are adding “AI” to everything these days, but the big question is how to test it. We are already seeing AI products go awry. Engineers working on AI fear the little Frankenstein minds they created might make some bad decisions. Like traditional software, this new AI-based software needs to be tested.

Speaker Details:

Twitter: @jarbon

LinkedIn: Jason Arbon

Website: test.ai

Facebook: Jason Arbon

Prior Speaking Events: Selenium Conf, TestBash, StarEast, StarWest, Agile DevOps, etc.

Hi Jason,

Awesome presentation with some really disruptive ideas. If I may be allowed a couple of questions?

1. Your distinction between verification checks and forensic tests is very useful, as is the guideline for how to split effort & resources between them in various situations. However, I wonder whether we have that VvT ratio definition correct. You argue that a product with a lot of code churn should be more heavily verification-checked than one released once a year – yet you give it a VvT ratio of 100:1 instead of 1:1. Have I interpreted that table correctly?

2. You expressed the opinion that static analysis was a waste of time – yet my experience is that it can reveal a lot of questionable coding practices with very little effort. Agreed, it can be a thankless task to try to deal with every little warning that the compilers, style checkers and flow analysers produce, but at least they prompt the programmer to think at least once about each item (e.g. unreachable code, missing braces, unintended assignments etc.) What’s your rationale for obviating these tools?

Hi Immo

1. Good catch, thanks! The 100s should have been zeros; I think a slide translation issue happened. Testing is good 😉 Inverse :-/ Fixed.

2. Static analysis often generates more false positives than it is worth, especially in light of the fact that the engineering time investigating could/should be spent in so many other things.

Jason,

I like your statements about BDD/Cucumber. It has become the new “automagic”. I assume it was Aslak Hellesøy you were chatting with. I’ve seen a couple of blog posts by him about his misgivings about the use/abuse of Cucumber/Gherkin for testing.

Hi Jim, yup, it was Aslak. I had to catch him at a speaker dinner to triple-check he feels that way before I mouthed off about it more widely 🙂

Jason, I really liked your overview and analysis and agree with all you stated as well. So many people think BDD is some savior, but it creates more work and maintenance than it’s worth. Yet I hear “but it’s FREE!” and I say “it’s the opposite of free”. Anyway, thanks for the informative webinar!